Artificial Intelligence as a pantheon of collective peer pressure

How does AI relate to a fictional pantheon?

A brief, fictional history

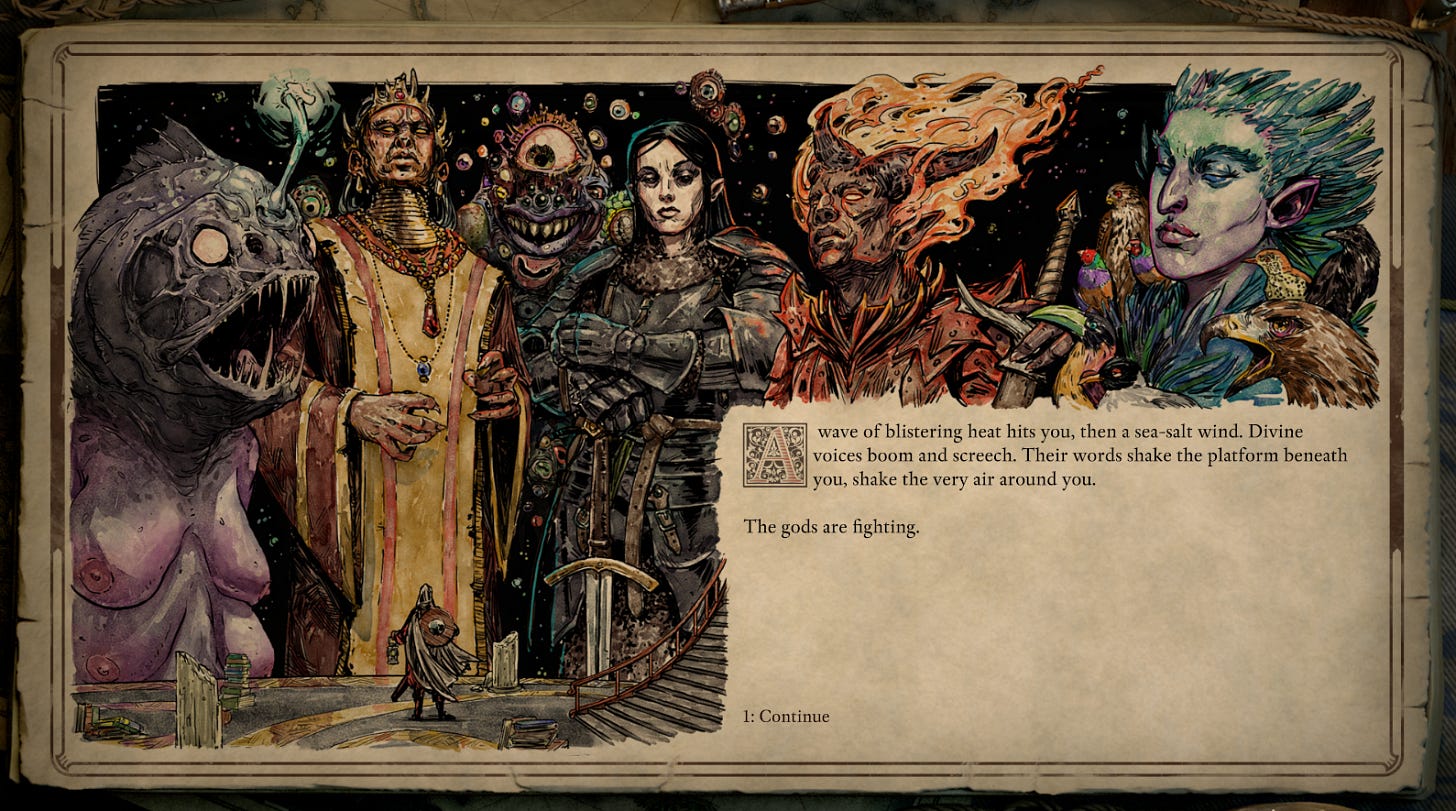

The video game *Pillars of Eternity* is set in a mostly typical sword-and-sorcery world designed to recapture the magic of older classics like *Baldur’s Gate* and *Planescape: Torment*. With developers like Chris Avellone and Josh Sawyer at the helm, it’s no wonder that the worldbuilding is a little different from what you’d expect in a Tolkien-esque fantasy setting.

But right now, there is only one thing you need to know about the world of Eora: its gods aren’t real. And when I say they aren’t real, I don’t mean they’re fictional. Of course they’re fictional, but within the setting, the gods aren’t really gods. Let me explain with a mini lore dump, and then we can move on to how this relates to AI.

You see, in ancient Eora, there was a civilization known as the Engwithans. The Engwithans were skilled at a mix of in-universe science and magic called animancy: the study and magical use of the soul. They also deserve an honorable mention for their mathematics and industriousness. Eventually, their continued effort to understand the world around them resulted in a discovery as monumental as it was distressing: their gods were not real. Suddenly, a universe that had seemed well-ordered and logical was chaotic and out of control. Reincarnation (which, in-universe, is as real and accepted as the water cycle) was not guided; there were no lessons to be learned before being shot into another body for another life. It just happened. Forever. It was a never-ending cycle of suffering, all in a world without meaning.

This was a problem, and the Engwithans didn’t handle it well. A select group of animancers came up with a plan to “solve” the problem of the gods’ empty driver’s seat. If the gods didn’t actually exist, well, why not make some that did? The goal would be to create gods that *could* guide reincarnation. Gods that could guide society over the ages to become better and more efficient. Gods that could provide meaning to a terrible and empty vastness.

How shall we console ourselves?

Fast forward a bit. This conspiracy is worked from different angles for years until, finally, the moment comes. Through a bunch of in-universe magic that would take way too long to explain, this small team of animancers pulled a Jonestown on the entirety of Engwithan civilization. Except when the Engwithans drank the Flavor Aid, it wasn’t just death. Their souls were taken and mashed together into a pantheon of massive, collective singularities, each with its own identity. These singularities took the names of gods from the Engwithan religion and acted in that capacity. Suddenly, Ondra, the goddess of the sea, was actually controlling currents and sea winds, and Berath, the god of cyclical death, actively controlled reincarnation. Those with legal issues could now pray to, and actually receive answers from, Woedica (the once and possibly future queen of the pantheon); those braving dangerous waters could pray to Ondra for safe passage; slaves looking to revolt against their masters could invoke Skaen, god of defiance and violent rebellion; and so on. Pay attention to these names; they will be important.

Woedica herself summarizes the effort to the player:

When we ascended to godhood, we did so to provide for a savage people. Our goal was to craft a society whose values were made to last. Control gave us the power to strengthen the souls of intelligent [mortals] over generations. We made you wiser, stronger, more likely to develop the society we thought you deserved. It was an ambitious plan, our utopia. Perhaps too ambitious. We ascended to power responding to a need inadequately satisfied. Those of us [mortal Engwithan individuals] who agreed with the apotheosis project, and many who did not, submitted to a violent and horrible erasure of our individuality. We adopted the forms of beings from [our] most prevalent myths. There were other faiths and legends, but we labored to strike their names from history.

The gods spoke to their priests; they patronized city states; they cursed and blessed their faithful in equal measure. They were as real as real could be. And those animancers who led the effort ensured that the record was written as if this had always been the case.

But there were “bugs” in the gods that their designers did not foresee. For example, when the remainder of Engwithan civilization threatened to uncover the secret of the gods, the pantheon largely agreed to suppress and destroy that knowledge to preserve their ability to guide and control society. One, however, dissented.

Abydon was the god of invention, aspiration, machinery, and progress. True to his function, he believed that if mortals learned the truth of the gods, this would be a kind of “progress”: it would inspire industriousness and growth. At odds with his colleagues, he was quickly deposed and mostly destroyed, and the secret was maintained. The takeaway is this: the gods act exactly as their “programming” dictates. Video-game nerds have a fun saying: “it’s not a bug, it’s a feature.” Other examples of these features include Wael, the god of hidden knowledge, who is as likely to grant access to secret information as he is to conceal it, even from himself.

These contradictory behaviors, over time, led to more infighting among the gods than the Engwithans could have planned for. Disagreements on how to guide civilization abounded, eventually resulting in the conflict that drives the central plot of the games.

If you’ve stuck with me this far, thanks. I promise I’m getting to the point.

AI: I am he as you are me and we are all together

Essentially, each individual god was composed of hundreds of thousands of individuals’ voices: cultural norms and values codified into seemingly eternal canon. That’s not unlike religion in the real world, which is really a packaged bundle of our forebears’ values and beliefs. The primary difference is that Eorian gods had both the agency and the power to enforce those beliefs.

Let’s put a pause on that and look at large language models (LLMs) like ChatGPT. LLMs are, in ChatGPT’s own words: “…trained on a massive dataset consisting of publicly available text, licensed text, and other content that was freely available or permitted for use. This included books, articles, websites, and dialogue examples — covering many fields like history, science, literature, pop culture, philosophy, and casual conversation.”

When you give a prompt to an AI (say, the word ‘football’), the model isn’t searching a database for related terms or looking up synonyms. Instead, every word or word fragment (a ‘token’) is represented as a point in a kind of invisible map: a high-dimensional mathematical space where meanings are woven together by their relationships to one another. ‘Football’ doesn’t call up a list of similar words; it nudges the model to predict which token, mathematically speaking, is most likely to come next, based on patterns absorbed from billions of examples. It’s less like pulling a thread labeled ‘sports’ and more like sending a ripple through a vast, woven fabric of language, where probability suggests what comes next.[1]
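To make “probability suggests what comes next” a little more concrete, here is a deliberately tiny sketch. It is not how ChatGPT works internally: real LLMs use neural networks over learned token embeddings, while this toy simply counts which word follows which (a bigram model). The five-sentence corpus and the helper names `build_bigrams` and `next_token_probs` are hypothetical, made up for illustration. Still, the output is the same kind of thing an LLM produces: a probability for each possible next token.

```python
from collections import Counter, defaultdict

# A tiny, made-up corpus standing in for "billions of examples".
CORPUS = [
    "football fans cheer",
    "football fans sing",
    "football season starts",
    "soccer fans cheer",
    "football fans cheer",
]

def build_bigrams(sentences):
    """Count how often each word is immediately followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def next_token_probs(follows, word):
    """Turn raw follow-counts into a probability for each possible next word."""
    counts = follows[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

bigrams = build_bigrams(CORPUS)
print(next_token_probs(bigrams, "football"))
# {'fans': 0.75, 'season': 0.25}
```

Nothing in the table “knows” what football is; feed it a different corpus and the probabilities shift, because the model can only reflect the text it was given.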

In the world of Eora, the god Skaen acts as the personification of rebellion. But why does he exist? Why would Engwithans create a god of revolt and riots if their goal was to govern and progress society under a set of firm principles and beliefs?

Earlier, we briefly covered the contradictory nature of gods like Abydon. On that note, why does Skaen, the god of rebellion, frequently ally himself with Woedica, the patron of established order, hierarchy, and rulership?

Clever as they were, the Engwithans knew that frustration, resentment, and rage were inevitable. They knew that these heated emotions needed containment: a place to snuff themselves out. Wildfires destroy entire ecosystems, but a controlled burn? That’s much healthier.

Skaen gave mortals a patron for the oppressed. The downtrodden now felt as though someone heard their cries, saw their plight, and felt their anger. And that someone was very, very real. Their god of the beaten and battered would manifest himself and walk among them, leading them against their oppressors in violent revolutions that sated their thirst for revenge.

And somehow, the society that enabled that oppression continued to thrive.

When the inevitable revolts occur, when the disorganized mob falls apart, when the freedom fighters and charismatic leaders squabble and succumb to infighting, that’s when Woedica’s utopia steps back in. She points and says:

"On the outset of the plan, some believed that mortals would outgrow us - as if that was a favorable outcome! To me, our eternal pantheon was always a critical fixture. My siblings desired to influence mortals and steer them in a proper direction. Even if that direction led to places we could not follow. If our pantheon found that mortals had cultivated a perfect, lawful system to maturity, I would not voice a word in protest. I would merely stand aside and await the inevitable collapse. [But now], we're pulling the dough from the oven before it's had a chance to fully rise. You unfortunate bastards never had a chance.”

“See? You need us.”

Now back to LLMs. All of the data these models are trained on (articles, forum posts, books, screenplays, transcripts, and whatever else you can think of) was written by actual humans. Walls of text layered with opinions, biases, dogma, critique, conformity, and feeling. In such a model, even rebellion itself, as a concept, is twisted to fit a mold that conforms to societal expectations. In such a collectivist system, the mainstream becomes both the status quo and the rebellion against it. Which raises a question: can a society heavily influenced by such a system ever truly rebel?

When a lawyer queries ChatGPT for information on niche or unfamiliar common-law precedents, he’s not so different from a fictional Eorian who prays to Woedica for guidance through a legal dispute. Both responses are drawn from a legion of individuals’ thoughts, feelings, and emotions, all experienced and expressed in the past. Both responses are generated to match the expectations of the person asking: ChatGPT adjusts its tone and pace to match, statistically, what it predicts its user will find most agreeable. Meanwhile, the Eorian will be comforted by Woedica’s answer, however derisive, stern, and final. That’s exactly how she should be, after all, because that’s how she’s expected to be.

In sum, and at the risk of being repetitive, both queries are answered by the collective voice of humans past: concentrated, expressed, and discharged by a freeze-frame group photo of hundreds of thousands of voices. Both answers are filled with prejudices and biases. Regardless of the querents’ goals, and regardless of the accuracy of the answers, the information received will be colored and shifted by layers upon layers of groupthink.

The society around them defines their function. And they, in turn, define the society that defined them.

I’m reminded of a quote attributed to Eliot Schrefer: “Tradition is just peer pressure from the dead.” This article could spin off into many sub-topics from here, but the question I want to focus on is:

How might our collective beliefs and biases govern us as we utilize AI more to solve problems both small and large?

If Americans use ChatGPT to discuss football more than Europeans do, then over time it could come to treat ‘football’ as the American game rather than what we Yanks call soccer. You would think ‘football’ and immediately picture a man hugging a brown ball against his torso as he runs the length of a field. Now let’s raise the stakes, because the same bias would surely show up in terms that are frequently paired, like “color” and “crime,” “love” and “beauty,” “evil” and “justice.”
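Here is a toy illustration of that skew, under heavily simplified assumptions: we pretend a model’s idea of ‘football’ is just whichever word most often appears alongside it in its training text. The two sentence templates, the corpora, and the helper `top_association` are all hypothetical. Nothing about any individual sentence changes between the two corpora; only the mix of American and European writers does, and that alone flips the “default” meaning.

```python
from collections import Counter

# Hypothetical sentence templates from two groups of writers.
AMERICAN = "football touchdown"
EUROPEAN = "football goal"

def top_association(corpus, word="football"):
    """Return the word that most often appears in the same sentence as `word`."""
    counts = Counter()
    for sentence in corpus:
        tokens = sentence.split()
        if word in tokens:
            counts.update(t for t in tokens if t != word)
    return counts.most_common(1)[0][0]

# Same two kinds of sentences, mixed in different proportions.
mostly_american = [AMERICAN] * 7 + [EUROPEAN] * 3
mostly_european = [AMERICAN] * 3 + [EUROPEAN] * 7

print(top_association(mostly_american))  # touchdown
print(top_association(mostly_european))  # goal
```

Swap ‘football’ for ‘crime’ and the sentence templates for something less innocent, and the same arithmetic applies: whoever writes the most text sets the default association.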

We’re talking about an effect known in psychology as affective priming, in which a first, emotionally charged stimulus “primes” the brain to interpret whatever follows through its emotional lens. Imagine the word “crime” flashed just before a second image of a person. Now imagine “love” and “beauty” flashed only alongside a very specific kind of person, “evil” flashed alongside a dissident political party, and “justice” flashed alongside images of that party’s protests being suppressed.

In just milliseconds, viewers might (unconsciously) associate crime with the second image’s racial or visual characteristics, even when there is no rational connection.

This isn’t theory. It's reality.

And with compounding frequency, users will encounter those biases and absorb them, even if they’re unaware of it. Will righteous revolution be confused with looting and rioting? Will God and sinner be more closely associated than holy and true?

When we get to that point, is there any way back?

It’s been nearly 3,800 years, and society still can’t escape Hammurabi’s Code.

People are using ChatGPT more and more often, and it and other LLMs will most likely supplant Google as a way of searching the internet. With that many users, the model behind ChatGPT is going to shape, even passively, the thoughts and beliefs of the people using it. How might our own biases be reflected back at us, and can we avoid the self-reinforcing feedback loop that would form in such an echo chamber?
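The echo-chamber worry can be sketched as a toy feedback loop. Everything here is an assumption made for illustration, not a description of how OpenAI actually trains ChatGPT: we suppose a hypothetical model that always answers with whichever view dominates its data (greedy, no sampling), and we suppose its answers get folded back into the next round of training text. Under those assumptions, a 60/40 split hardens toward unanimity within a few rounds.

```python
def simulate_echo_chamber(view_a, view_b, rounds, outputs_per_round):
    """Toy feedback loop. Each round, a hypothetical 'model' answers every query
    with whichever view dominates its data, and those answers are added back
    into the data. Returns view A's share of the data after each round."""
    shares = []
    for _ in range(rounds):
        if view_a >= view_b:
            view_a += outputs_per_round  # the model echoes the current majority
        else:
            view_b += outputs_per_round
        shares.append(view_a / (view_a + view_b))
    return shares

shares = simulate_echo_chamber(view_a=60, view_b=40, rounds=5, outputs_per_round=100)
print([round(s, 2) for s in shares])
# [0.8, 0.87, 0.9, 0.92, 0.93]
```

Real systems sample rather than always picking the top answer, and their training data is presumably filtered, so any real drift would be slower and messier. The sketch only shows the direction the loop pushes: the minority view never gets repeated, so its share can only shrink.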

Beyond that, when ChatGPT is inevitably tuned to sway public opinion, much as social media algorithms are now, could we find ourselves so drowned in information that we no longer know what true rebellion is?

I’ll end with the closing narration from *The Twilight Zone* episode “The Monsters Are Due on Maple Street.”

“The tools of conquest do not necessarily come with bombs and explosions and fallout. There are weapons that are simply thoughts, attitudes, prejudices to be found only in the minds of man. Prejudices can kill, and suspicion can destroy, and a thoughtless frightened search for a scapegoat has a fallout of its own for the children, and the children yet unborn.”

[1] Credit to cunningjames on Reddit, who corrected a slight inaccuracy and pointed me in the right direction regarding how AI “maps” words.
